Fast file transfer: TCP vs UDP

TCP or UDP: which is the better option for fast file transfer? With the continuing explosion in data creation, companies are struggling to move large data sets, often gigabytes in size. While every variant of FTP can deliver large volumes of data, long distances and poor internet connections introduce latency and packet loss, which slow transfers down.

This blog post compares two options for fast file transfer, TCP and UDP. We look at the benefits and drawbacks of each, and consider solutions for your fast file transfer requirements.

TCP vs UDP

Whether you use TCP or UDP, data is broken into packets before it is sent to the receiving computer.

TCP

TCP (Transmission Control Protocol) transfers data as a carefully controlled, numbered sequence of packets. As packets are received at the destination, acknowledgments are sent back to the sender. If the sender does not receive an acknowledgment within a certain period of time, it simply sends the packet again. To preserve the sequence, the receiver holds back any data that arrives after a missing packet until that packet has been successfully retransmitted, and the sender can only have a limited amount of unacknowledged data in flight at any one time (the window, described below).

TCP is the underlying transport protocol used by all the standard managed file transfer (MFT) protocols, such as FTP, FTPS and SFTP.

On poor-quality networks, the original packet may arrive intact while its acknowledgment is corrupted or lost on the way back. The sender then resends the whole packet unnecessarily, which adds to transmission times. In addition, TCP does not use all of the available bandwidth at the start of a transfer: it ramps up its sending rate until packets start to be lost, so the transmission rate is dictated by how much data gets through before failures occur.
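The effect of lost acknowledgments can be seen in a toy simulation. The sketch below is a simplified stop-and-wait model, not real TCP (which keeps a window of packets in flight and uses cumulative acknowledgments): the hypothetical `stop_and_wait` function sends one packet, waits for its acknowledgment and resends on timeout, with independent loss probabilities for data packets and acknowledgments. The loss rates are illustrative assumptions.

```python
import random

def stop_and_wait(num_packets: int, data_loss: float, ack_loss: float, seed: int = 0):
    """Toy stop-and-wait sender/receiver (not a real TCP implementation).

    Loss probabilities must be below 1.0. Returns (transmissions, accepted, duplicates):
    total packets sent, the sequence numbers the receiver accepted, and how many
    arrivals were needless resends caused by lost acknowledgments.
    """
    rng = random.Random(seed)
    transmissions = 0
    duplicates = 0
    accepted: list[int] = []
    for seq in range(num_packets):
        while True:
            transmissions += 1
            if rng.random() < data_loss:
                continue  # data packet lost: sender times out and resends it
            if len(accepted) == seq:
                accepted.append(seq)  # first copy of this packet: receiver keeps it
            else:
                duplicates += 1  # receiver already had it; only the ACK went missing
            if rng.random() < ack_loss:
                continue  # ACK lost: sender times out and resends an already-delivered packet
            break  # ACK arrived: move on to the next packet
    return transmissions, accepted, duplicates

sent, accepted, dupes = stop_and_wait(1_000, data_loss=0.0, ack_loss=0.1)
print(f"{sent} transmissions for {len(accepted)} packets, {dupes} needless resends")
```

With both loss rates at zero every packet is sent exactly once; when only acknowledgments are lost, every extra transmission is a resend of data the receiver already had.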

Some things to consider for TCP:

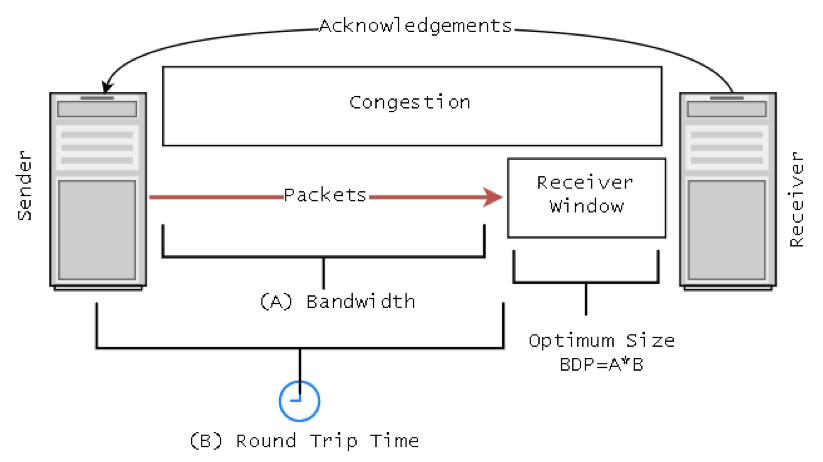

Deliverability over speed / Calculating the Bandwidth Delay Product

This emphasis on guaranteed delivery rather than speed brings a certain degree of delay. We can measure it with a simple ping command, which reports the round trip time (RTT): the greater the distance to be covered, the longer the RTT. The RTT is used to calculate the Bandwidth Delay Product (BDP), which we need to know when calculating network speeds. The BDP is the amount of data that can be 'in flight' (sent but not yet acknowledged) on the link, and is found by multiplying the bandwidth by the round trip time. For example, a round trip time of 32 milliseconds on a 100Mbps line gives a BDP of 3.2 megabits, or 400,000 bytes (about 390KB) of data in transit.
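The BDP arithmetic is easy to script. This minimal sketch assumes the bandwidth is given in bits per second and the RTT in seconds (for example, the average time reported by ping, converted from milliseconds); the function name `bdp_bytes` is illustrative.

```python
def bdp_bytes(bandwidth_bps: float, rtt_seconds: float) -> float:
    """Bandwidth Delay Product in bytes: bandwidth (bits/s) x RTT (s), divided by 8 bits per byte."""
    return bandwidth_bps * rtt_seconds / 8

# Worked example from the text: 100Mbps line, 32 ms round trip time.
bdp = bdp_bytes(100e6, 0.032)
print(f"{bdp:,.0f} bytes = {bdp / 1024:.1f} KiB")  # 400,000 bytes = 390.6 KiB
```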

Window Scaling

The sending and receiving computers use windows ('views' of their buffers) to control how much data may be transmitted before the sender has to pause. The receive window is the free space available in the receiving buffer; when the buffer becomes full, the sender stops sending new packets. Historically, the receive window was limited to 64KB (65,535 bytes), because the TCP header uses a 16-bit field to communicate the current receive window size to the sender. Today it is common practice to allow much larger windows using the Window Scaling option, which multiplies the advertised value by a power of two, and many operating systems adjust the window size dynamically. Ideally, the receive window should be at least as large as the BDP; otherwise the sender sits idle waiting for acknowledgments and the link is never full.
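To see why the receive window matters, note that a single TCP connection can deliver at most one window of data per round trip. The sketch below (hypothetical helper names) computes that ceiling, and the smallest window scale shift that lets the 16-bit window field cover a target window such as the BDP. It assumes the RFC 7323 rule that the advertised value is shifted left by the scale factor, with a maximum shift of 14.

```python
def max_throughput_bps(window_bytes: int, rtt_seconds: float) -> float:
    """Ceiling on single-connection throughput: one full window per round trip."""
    return window_bytes * 8 / rtt_seconds

def window_scale_shift(target_window_bytes: int) -> int:
    """Smallest shift s such that 65,535 << s covers the target window (RFC 7323 caps s at 14)."""
    shift = 0
    while (65_535 << shift) < target_window_bytes:
        shift += 1
    if shift > 14:
        raise ValueError("target window exceeds what TCP window scaling can advertise")
    return shift

# Unscaled 64KB window on the 32 ms link from the BDP example:
print(f"{max_throughput_bps(65_535, 0.032) / 1e6:.1f} Mbit/s")  # 16.4 Mbit/s, far below 100Mbps
print(window_scale_shift(400_000))  # 3, since 65,535 x 8 = 524,280 bytes >= 400,000
```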

TCP speed fluctuations

The congestion window is maintained by the sender and limits the amount of data in flight, in addition to the limit set by the receive window. Its aim is to avoid overloading the network: while no packets are lost, the congestion window keeps growing over the course of the transfer. However, when packets are lost, the congestion window shrinks, on the assumption that the capacity of the network has been reached; likewise, if the receive window fills, the sender must wait for the receiver to free up buffer space. This is why you will often see a TCP download increase in speed and then suddenly slow down again.
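The grow-then-shrink pattern can be illustrated with a simplified, Reno-style model of the congestion window, measured in segments per round trip. This is a sketch under stated assumptions, not any particular operating system's algorithm (Linux, for example, defaults to CUBIC): the window doubles each round trip during slow start up to a threshold, then grows by one segment per round trip, and is halved whenever a loss occurs. Which round trips see a loss is an input you choose.

```python
def simulate_cwnd(rounds: int, loss_rounds: set[int], ssthresh: int = 64) -> list[int]:
    """Congestion window (in segments) used in each round trip, simplified Reno-style model."""
    cwnd = 1
    history: list[int] = []
    for r in range(rounds):
        history.append(cwnd)  # window in use during this round trip
        if r in loss_rounds:
            ssthresh = max(cwnd // 2, 2)  # loss: remember half the current window...
            cwnd = ssthresh               # ...and drop back to it (multiplicative decrease)
        elif cwnd < ssthresh:
            cwnd = min(cwnd * 2, ssthresh)  # slow start: double each round trip
        else:
            cwnd += 1  # congestion avoidance: additive increase
    return history

# A single loss in round trip 8 produces the familiar speed-up-then-slow-down shape:
print(simulate_cwnd(12, {8}, ssthresh=16))
# [1, 2, 4, 8, 16, 17, 18, 19, 20, 10, 11, 12]
```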


UDP

When UDP (User Datagram Protocol) is used, packets are sent 'blind': the transfer continues regardless of whether the data is being received successfully. Any lost packets leave the received data incomplete or corrupted. In a streamed video this might mean a few missing frames or out-of-sync audio, but for a file it generally means the whole file has to be resent. The lack of delivery guarantees makes UDP fast, but unless it is combined with rigorous error checking and retransmission, it is often unsuitable for file transfers.
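The sketch below simulates 'blind' sending to show why UDP needs error checking layered on top. Each chunk of a stand-in file is either delivered or silently dropped (the hypothetical `send_blind` function models loss only, not the reordering or duplication real UDP can also exhibit), and an end-to-end SHA-256 comparison, using Python's standard `hashlib`, is what reveals the damage.

```python
import hashlib
import random

def send_blind(data: bytes, chunk_size: int, loss_rate: float, seed: int = 1) -> bytes:
    """Simulate UDP-style sending: each chunk is delivered or silently dropped, and the
    sender is never told which. Returns whatever the receiver ends up with."""
    rng = random.Random(seed)
    chunks = [data[i:i + chunk_size] for i in range(0, len(data), chunk_size)]
    return b"".join(chunk for chunk in chunks if rng.random() >= loss_rate)

original = bytes(range(256)) * 400  # 102,400-byte stand-in for a file
received = send_blind(original, chunk_size=1024, loss_rate=0.05)

# End-to-end error checking: compare digests computed by sender and receiver.
if hashlib.sha256(received).digest() == hashlib.sha256(original).digest():
    print("file intact")
else:
    print(f"file corrupted: {len(original) - len(received):,} bytes missing, resend required")
```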

A select few vendors have built proprietary protocols on top of the open UDP standard to move data faster, a lot faster. These protocols generally work in the same way: they maximise utilisation of the available bandwidth by flooding the connection with data, with controls built in to ensure that other network traffic doesn't suffer. Compared with standard TCP-based transfers, this approach can increase speeds by up to 1,000 times, depending on network conditions and the bandwidth available.
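The vendors' rate controls are proprietary, so the following is only an illustration of one common pacing technique, a token bucket, that a UDP-based sender could use to cap its rate so it doesn't starve other traffic. The `TokenBucket` class, its parameters and the simulated clock are assumptions made for the sketch.

```python
class TokenBucket:
    """Token-bucket pacer (illustrative): permits `rate_bytes_per_s` on average,
    with bursts of at most `burst_bytes`. Time is passed in explicitly, in seconds."""

    def __init__(self, rate_bytes_per_s: float, burst_bytes: float):
        self.rate = rate_bytes_per_s
        self.capacity = burst_bytes
        self.tokens = burst_bytes  # start with a full bucket
        self.last = 0.0            # time of the previous refill

    def try_send(self, size: int, now: float) -> bool:
        # Refill for the time elapsed since the last call, never beyond the burst cap.
        self.tokens = min(self.capacity, self.tokens + (now - self.last) * self.rate)
        self.last = now
        if self.tokens >= size:
            self.tokens -= size  # spend tokens: this datagram may go out now
            return True
        return False  # over the rate limit: hold this datagram back

# Offer 1,000-byte datagrams every 0.1 ms (10 MB/s) for one simulated second,
# through a bucket that allows 1 MB/s with 10KB bursts.
bucket = TokenBucket(rate_bytes_per_s=1_000_000, burst_bytes=10_000)
sent = sum(bucket.try_send(1_000, now=i * 0.0001) for i in range(10_000))
print(f"{sent} of 10000 datagrams allowed through")
```

After the initial burst, accepted datagrams settle at roughly the configured 1 MB/s; a real sender would wait until enough tokens accumulate rather than discarding the attempt.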

These UDP-based solutions have now matured to the point where they can be used in many scenarios, for example:

- Disaster recovery and business continuity

- Content distribution and collection, e.g., software or source code updates, or CDN scenarios

- Continuous sync: near real-time syncing for 'active-active' style high availability (HA)

- Replication, from basic master-slave replication to more complex bi-directional sync and mesh scenarios

- Person-to-person distribution of digital assets

- Collaboration and exchange for geographically distributed teams

- File-based review, approval and quality assurance workflows

The fast file transfer solution

Implementing a managed file transfer solution that can stream files to a target server provides productivity gains over traditional store-and-forward workflows. By writing a large data set directly onto the intended target system, rather than staging it on an intermediate server first, you remove a step from the process.
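Streaming to the target, rather than storing and forwarding, amounts to writing each chunk as it arrives. In this minimal sketch, in-memory `io.BytesIO` objects stand in for the incoming network stream and the file on the target server (in practice these might be a socket wrapped with `socket.makefile('rb')` and a file opened in `'wb'` mode); the helper name `stream_to_target` and the 1 MiB default chunk size are assumptions. A running SHA-256 digest lets the receiver verify the data without re-reading it.

```python
import hashlib
import io
from typing import BinaryIO

def stream_to_target(source: BinaryIO, target: BinaryIO, chunk_size: int = 1 << 20) -> str:
    """Copy source to target chunk by chunk, with no intermediate staging copy.
    Returns the SHA-256 hex digest of everything written."""
    digest = hashlib.sha256()
    while True:
        chunk = source.read(chunk_size)
        if not chunk:  # end of the incoming stream
            break
        target.write(chunk)   # written straight onto the destination
        digest.update(chunk)  # integrity check computed on the fly
    return digest.hexdigest()

payload = bytes(range(256)) * 12_000
src, dst = io.BytesIO(payload), io.BytesIO()
print(stream_to_target(src, dst, chunk_size=4096) == hashlib.sha256(payload).hexdigest())  # True
```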

The world of managed file transfer has evolved to enable companies that need to move big data to do so as efficiently as possible. Our customers increasingly need to streamline the delivery of data of varying types, sizes and structures from external trading partners into internal big data analytics solutions.

Another option worth investigating is multi-threading, which several products support. Multi-threaded transfers can, in theory, move data more quickly than single-threaded transfers because they send multiple separate streams of packets, each with its own window; this partly offsets the delays caused by having to resend lost packets. However, the transfers may still be limited by full receive windows or by disk write speeds, and any file sent via multiple threads must be reassembled on arrival, which requires further resources. In general, most large-file transfer software is built either around multi-threading or around a blend of UDP transfer with TCP control.
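The split-and-reassemble step can be sketched with Python's `concurrent.futures.ThreadPoolExecutor`. Here the hypothetical `fetch_range` function stands in for one transfer stream (a real product would open a separate connection per range); what matters is that each piece carries its byte offset, so pieces can be written into place in whatever order they complete.

```python
from concurrent.futures import ThreadPoolExecutor, as_completed

def split_ranges(size: int, parts: int) -> list[tuple[int, int]]:
    """Divide [0, size) into `parts` contiguous (offset, length) ranges."""
    base, extra = divmod(size, parts)
    ranges, offset = [], 0
    for i in range(parts):
        length = base + (1 if i < extra else 0)  # spread any remainder over the first ranges
        ranges.append((offset, length))
        offset += length
    return ranges

def fetch_range(source: bytes, offset: int, length: int) -> tuple[int, bytes]:
    """Hypothetical stand-in for one transfer stream; returns the piece with its offset."""
    return offset, source[offset:offset + length]

def parallel_transfer(source: bytes, streams: int = 4) -> bytes:
    target = bytearray(len(source))  # preallocated, like creating the full-size file up front
    with ThreadPoolExecutor(max_workers=streams) as pool:
        futures = [pool.submit(fetch_range, source, off, length)
                   for off, length in split_ranges(len(source), streams)]
        for future in as_completed(futures):  # pieces may finish in any order
            offset, piece = future.result()
            target[offset:offset + len(piece)] = piece  # reassemble by offset
    return bytes(target)

data = bytes(range(256)) * 1_000 + b"tail"
print(parallel_transfer(data, streams=4) == data)  # True
```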

Combining UDP and TCP

The best results for fast file transfer come from combining UDP and TCP. UDP is used to transfer the data, while a TCP connection carries control information, such as which packets were lost, so that those packets can be resent. An agent at the receiving station then reconstructs the data after the transfer.
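The following toy simulation sketches that division of labour. The hypothetical `hybrid_transfer` function sends numbered chunks over a lossy 'UDP' channel without waiting for acknowledgments; after each pass, the receiver's list of missing sequence numbers (assumed to travel over a reliable 'TCP' control channel, with the total chunk count agreed up front) tells the sender what to resend. It illustrates the general idea only, not any vendor's protocol.

```python
import random

def hybrid_transfer(data: bytes, chunk_size: int, loss_rate: float, seed: int = 7):
    """Toy UDP-data / TCP-control transfer. loss_rate must be below 1.0.
    Returns (reassembled bytes, number of sending passes)."""
    rng = random.Random(seed)
    chunks = {seq: data[i:i + chunk_size]
              for seq, i in enumerate(range(0, len(data), chunk_size))}
    received: dict[int, bytes] = {}
    to_send = sorted(chunks)
    passes = 0
    while to_send:
        passes += 1
        for seq in to_send:  # "UDP": fire off every outstanding chunk, no per-packet ACKs
            if rng.random() >= loss_rate:
                received[seq] = chunks[seq]
        # "TCP" control message: receiver reports the sequence numbers it is still missing.
        to_send = [seq for seq in range(len(chunks)) if seq not in received]
    # Receiving agent reassembles the chunks in sequence order.
    return b"".join(received[seq] for seq in range(len(chunks))), passes

payload = bytes(range(256)) * 400
result, passes = hybrid_transfer(payload, chunk_size=1024, loss_rate=0.2)
print(result == payload, passes)
```

Only the missing chunks are resent on each pass, so a lossy link costs extra passes over a shrinking set of data rather than a full retransmission of the file.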

Some examples of effective use of this method include:

- Banking, where vast amounts of data are captured and moved around each day.

- Researchers needing to share large volumes of scientific and clinical research data.

- The manufacturing industry, sharing large files and data sets with global development teams.

If you have any questions about the speed of your file transfers, or about your chosen file transfer technology and infrastructure design, give our team of experts a call on 0333 123 1240.
